Knowledge-Enhanced Graph Encoding Method for Metaphor Detection in Text

-

摘要:

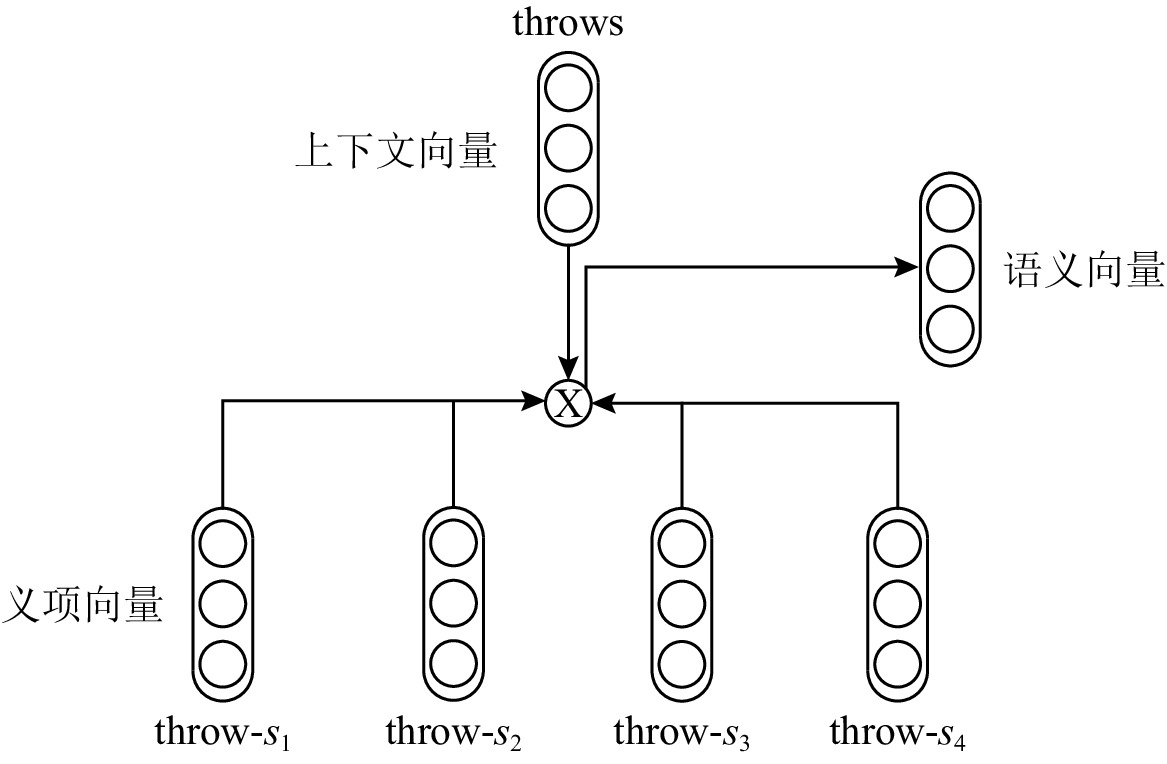

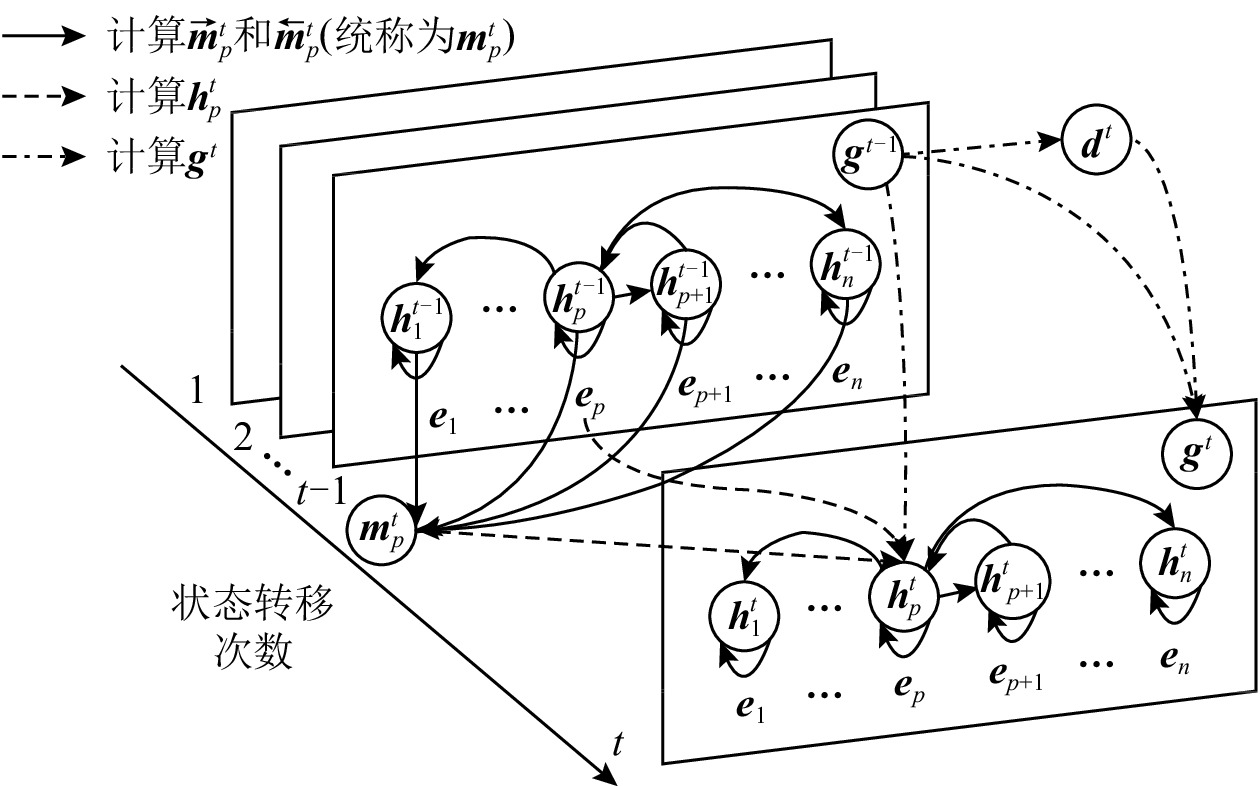

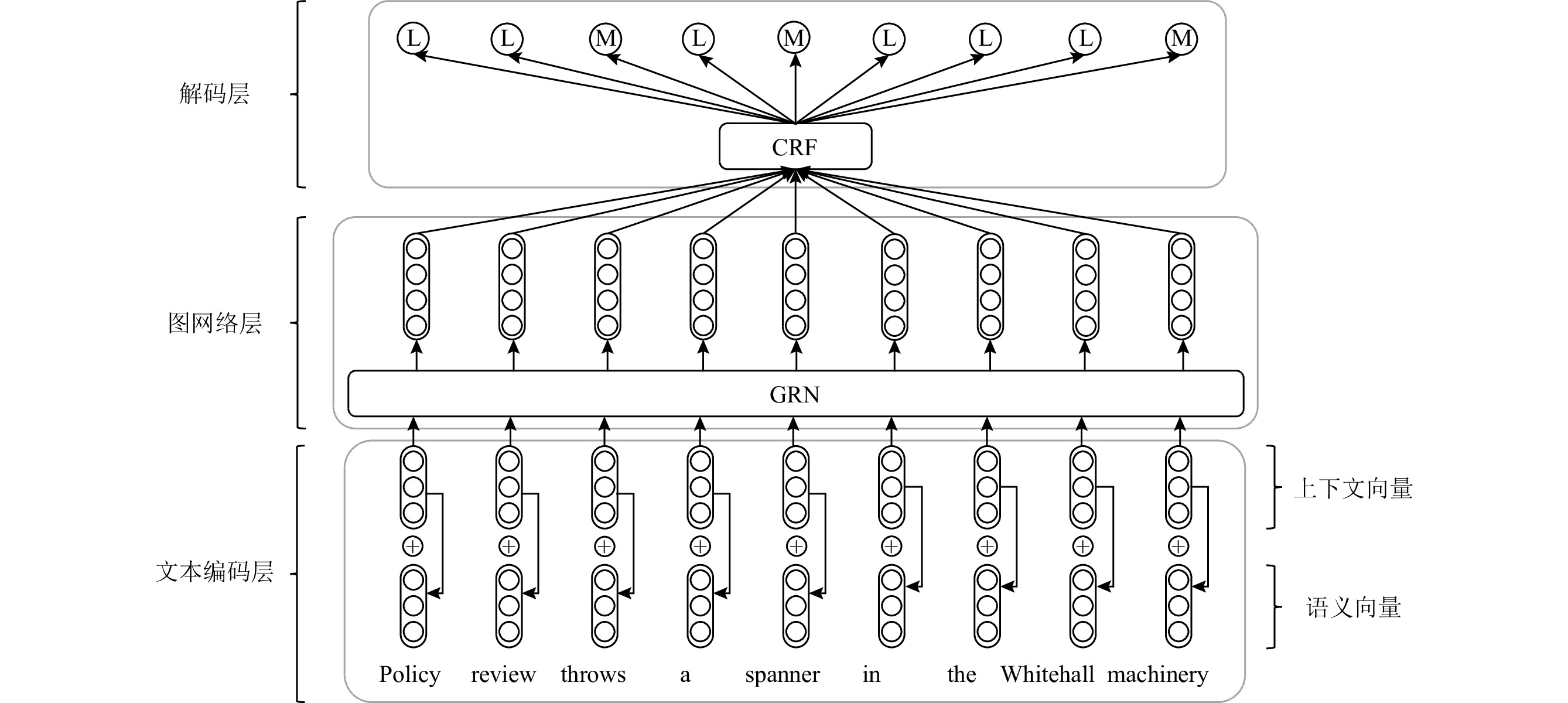

隐喻识别是自然语言处理中语义理解的重要任务之一,目标为识别某一概念在使用时是否借用了其他概念的属性和特点. 由于单纯的神经网络方法受到数据集规模和标注稀疏性问题的制约,近年来,隐喻识别研究者开始探索如何利用其他任务中的知识和粗粒度句法知识结合神经网络模型,获得更有效的特征向量进行文本序列编码和建模.然而,现有方法忽略了词义项知识和细粒度句法知识,造成了外部知识利用率低的问题,难以建模复杂语境.针对上述问题,提出一种基于知识增强的图编码方法(knowledge-enhanced graph encoding method,KEG)来进行文本中的隐喻识别. 该方法分为3个部分:在文本编码层,利用词义项知识训练语义向量,与预训练模型产生的上下文向量结合,增强语义表示;在图网络层,利用细粒度句法知识构建信息图,进而计算细粒度上下文,结合图循环神经网络进行迭代式状态传递,获得表示词的节点向量和表示句子的全局向量,实现对复杂语境的高效建模;在解码层,按照序列标注架构,采用条件随机场对序列标签进行解码.实验结果表明,该方法的性能在4个国际公开数据集上均获得有效提升.

Abstract:Metaphor recognition is one of the essential tasks of semantic understanding in natural language processing, aiming to identify whether one concept is viewed in terms of the properties and characteristics of the other. Since pure neural network methods are restricted by the scale of datasets and the sparsity of human annotations, recent researchers working on metaphor recognition explore how to combine the knowledge in other tasks and coarse-grained syntactic knowledge with neural network models, obtaining more effective feature vectors for sequence coding and modeling in text. However, the existing methods ignore the word sense knowledge and fine-grained syntactic knowledge, resulting in the problem of low utilization of external knowledge and the difficulty to model complex context. Aiming at the above issues, a knowledge-enhanced graph encoding method (KEG) for metaphor detection in text is proposed. This method consists of three parts. In the encoding layer, the sense vector is trained using the word sense knowledge, combined with the context vector generated by the pre-training model to enhance the semantic representation. In the graph layer, the information graph is constructed using fine-grained syntactic knowledge, and then the fine-grained context is calculated. The layer is combined with the graph recurrent neural network, whose state transition is carried out iteratively to obtain the node vector and the global vector representing the word and the sentence, respectively, to realize the efficient modeling of the complex context. In the decoding layer, conditional random fields are used to decode the sequence tags following the sequence labeling architecture. Experimental results show that this method effectively improves the performance on four international public datasets.
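The encoding layer can be illustrated with a small sketch. The fusion rule below is an assumption made for illustration only. The contextual vector $\boldsymbol{e}^{\mathrm{c}}$ is mapped by a hypothetical projection matrix `proj` into the sense space and used to attend over the word's candidate sense embeddings. The resulting sense vector $\boldsymbol{e}^{\mathrm{s}}$ is then concatenated with $\boldsymbol{e}^{\mathrm{c}}$. KEG's actual combination may differ. The toy dimensions stand in for the 1024 and 200 listed in Table 3.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def sense_vector(context: Vector, senses: Matrix, proj: Matrix) -> Vector:
    """Hypothetical e^s: attention-weighted mix of a word's candidate sense embeddings."""
    query = matvec(proj, context)  # hypothetical projection: context space -> sense space
    weights = softmax([sum(q * s for q, s in zip(query, sense)) for sense in senses])
    dim = len(senses[0])
    return [sum(w * sense[i] for w, sense in zip(weights, senses)) for i in range(dim)]


def encode_token(context: Vector, senses: Matrix, proj: Matrix) -> Vector:
    """Token representation [e^c ; e^s] of length d_c + d_s (concatenation is an assumption)."""
    return context + sense_vector(context, senses, proj)
```

With a 4-dimensional context vector and two 2-dimensional candidate senses, `encode_token` returns a 6-dimensional vector. Its last two entries are a convex combination of the sense embeddings, weighted toward the sense that best matches the context.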


Table 1 Examples of Sentences with Metaphor Words

| No. | Example sentence |
|---|---|
| 1 | It went to his head rather. |
| 2 | How to kill a process? |
| 3 | I invested myself fully in this relationship. |
| 4 | They forged contacts with those left behind in Germany. |

Note: In the original table, metaphorically used words are shown in bold.

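Table 2 Statistics of Datasets

| Dataset | Total words | Total sentences | Total metaphors | Metaphor ratio (%) | Avg. words per sentence |
|---|---|---|---|---|---|
| VUA-VERB | 239847 | 16189 | 6554 | 28.36 | 14.82 |
| VUA-ALLPOS | 239847 | 16189 | 15026 | 15.85 | 14.82 |
| TroFi | 105949 | 3737 | 1627 | 43.54 | 28.35 |
| MOH-X | 5178 | 647 | 315 | 48.68 | 8.00 |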
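The derived columns of Table 2 can be checked from the raw counts with a short script. Average words per sentence is total words divided by total sentences. For TroFi and MOH-X, the metaphor ratio equals metaphors divided by sentences, which is consistent with each sentence carrying a single annotated target. For the two VUA subsets, the ratio's denominator is not given in the table (it is neither the word count nor the sentence count), so the script does not try to reproduce it.

```python
# Raw counts from Table 2: (total words, total sentences, total metaphors)
STATS = {
    "VUA-VERB": (239847, 16189, 6554),
    "VUA-ALLPOS": (239847, 16189, 15026),
    "TroFi": (105949, 3737, 1627),
    "MOH-X": (5178, 647, 315),
}


def avg_words(name: str) -> float:
    """Average number of words per sentence."""
    words, sentences, _ = STATS[name]
    return words / sentences


def metaphors_per_sentence(name: str) -> float:
    """Metaphors divided by sentences, in %; matches the ratio column only for TroFi and MOH-X."""
    _, sentences, metaphors = STATS[name]
    return 100 * metaphors / sentences


for name in STATS:
    print(f"{name}: {avg_words(name):.2f} words per sentence")
```

The recomputed values agree with Table 2 to within 0.01.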

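Table 3 Hyper-Parameters Used in Our Experiments

| Hyper-parameter | Value |
|---|---|
| Dimension of $\boldsymbol{e}^{\mathrm{c}}$ | 1024 |
| Dimension of $\boldsymbol{e}^{\mathrm{s}}$ | 200 |
| Dimension of $\boldsymbol{k}$ | 256 |
| Dimension of $\boldsymbol{m}$, $\boldsymbol{h}$, $\boldsymbol{g}$ | 512 |
| Number of state transitions $K$ | 3 |
| Batch size | 16 |
| Dropout rate (BERT) | 0.5 |
| Dropout rate (rest of the model) | 0.4 |
| Learning rate (BERT) | 1E-5 |
| Learning rate (rest of the model) | 1E-3 |
| $L_2$ weight decay (BERT) | 1E-5 |
| $L_2$ weight decay (rest of the model) | 1E-3 |
| Warmup epochs | 5 |
| Maximum epochs | 50 |
| Gradient clipping | 4.0 |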
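Table 3 gives the BERT encoder and the rest of the model their own learning rates and weight decay, along with 5 warmup epochs and a gradient clipping threshold of 4.0. The sketch below shows one way these settings could be applied. It rests on two assumptions the table does not confirm. First, warmup is linear and the learning rate stays constant afterwards. Second, clipping rescales gradients by their global $L_2$ norm. The group names `bert` and `other` are hypothetical.

```python
import math
from typing import Dict, List

# Values from Table 3; the two group names are hypothetical labels.
PARAM_GROUPS = {
    "bert": {"lr": 1e-5, "weight_decay": 1e-5, "dropout": 0.5},
    "other": {"lr": 1e-3, "weight_decay": 1e-3, "dropout": 0.4},
}
WARMUP_EPOCHS = 5
MAX_EPOCHS = 50
CLIP_NORM = 4.0


def lr_at(epoch: int, base_lr: float, warmup: int = WARMUP_EPOCHS) -> float:
    """Linear warmup over the first `warmup` epochs (0-indexed), then constant.

    The post-warmup behaviour is an assumption; Table 3 does not specify it.
    """
    if epoch < warmup:
        return base_lr * (epoch + 1) / warmup
    return base_lr


def clip_by_global_norm(grads: Dict[str, List[float]], max_norm: float = CLIP_NORM) -> Dict[str, List[float]]:
    """Rescale all gradients together so that their joint L2 norm is at most max_norm."""
    total = math.sqrt(sum(g * g for vec in grads.values() for g in vec))
    if total <= max_norm:
        return grads
    scale = max_norm / total
    return {name: [g * scale for g in vec] for name, vec in grads.items()}


for group, cfg in PARAM_GROUPS.items():
    print(group, [lr_at(e, cfg["lr"]) for e in range(WARMUP_EPOCHS + 1)])
```

Because the two groups are scheduled separately, the fine-tuned BERT weights move about a hundred times more slowly than the randomly initialized graph and CRF layers throughout training.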

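Table 4 Experimental Results of KEG and SOTA Methods (%)

| Group | Model | VUA-VERB P | VUA-VERB R | VUA-VERB F1 | VUA-VERB Acc | VUA-ALLPOS P | VUA-ALLPOS R | VUA-ALLPOS F1 | VUA-ALLPOS Acc | TroFi P | TroFi R | TroFi F1 | TroFi Acc | MOH-X P | MOH-X R | MOH-X F1 | MOH-X Acc |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Group 1 | RNN_ELMo [7] | 68.2 | 71.3 | 69.7 | 81.4 | 71.6 | 73.6 | 72.6 | 93.1 | 70.7 | 71.6 | 71.1 | 74.6 | 79.1 | 73.5 | 75.6 | 77.2 |
|  | RNN_BERT [20] | 66.7 | 71.5 | 69.0 | 80.7 | 71.5 | 71.9 | 71.7 | 92.9 | 70.3 | 67.1 | 68.7 | 73.4 | 75.1 | 81.8 | 78.2 | 78.1 |
|  | RNN_HG [6] | 69.3 | 72.3 | 70.8 | 82.1 | 71.8 | 76.3 | 74.0 | 93.6 | 67.4 | 77.8 | 72.2 | 74.9 | 79.7 | 79.8 | 79.8 | 79.7 |
|  | RNN_MHCA [6] | 66.3 | 75.2 | 70.5 | 81.8 | 73.0 | 75.7 | 74.3 | 93.8 | 68.6 | 76.8 | 72.4 | 75.2 | 77.5 | 83.1 | 80.0 | 79.8 |
| Group 2 | Go Figure! [9] | 73.2 | 82.3 | 77.5 | - | 72.1 | 74.8 | 73.4 | - | - | - | - | - | - | - | - | - |
|  | DeepMet-S [10] | 76.2 | 78.3 | 77.2 | 86.2 | 73.8 | 73.2 | 73.5 | 90.5 | - | - | - | - | - | - | - | - |
| Group 3 | GCN_BERT [11] | - | - | - | - | - | - | - | - | 72.3 | 70.5 | 70.7 | 72.0 | 79.8 | 79.4 | 79.3 | 79.4 |
|  | MWE [11] | - | - | - | - | - | - | - | - | 73.8 | 71.8 | 72.8 | 73.5 | 80.0 | 80.4 | 80.2 | 80.5 |
| Ours | KEG | 80.5 | 77.4 | 78.9 | 86.7 | 74.6 | 76.5 | 75.5 | 92.8 | 75.6 | 77.8 | 76.7 | 76.2 | 82.1 | 81.6 | 81.8 | 81.6 |

Note: "-" indicates that no result is reported for that dataset.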
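The tables report precision (P), recall (R), F1, and accuracy (Acc). A minimal sketch of these metrics follows. It assumes the usual token-level definitions, with the metaphor label (encoded here as 1) as the positive class for P, R, and F1, and accuracy computed over all evaluated tokens. F1 is the harmonic mean of P and R. For example, KEG's VUA-VERB precision of 80.5 and recall of 77.4 give an F1 of about 78.9, matching Table 4.

```python
from typing import Sequence, Tuple


def prf_acc(gold: Sequence[int], pred: Sequence[int]) -> Tuple[float, float, float, float]:
    """Token-level P, R, F1 for the metaphor class (label 1) and overall accuracy, all in %."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must have the same length")
    tp = sum(1 for g, p in zip(gold, pred) if g == 1 and p == 1)
    fp = sum(1 for g, p in zip(gold, pred) if g == 0 and p == 1)
    fn = sum(1 for g, p in zip(gold, pred) if g == 1 and p == 0)
    correct = sum(1 for g, p in zip(gold, pred) if g == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = correct / len(gold) if gold else 0.0
    return (100 * precision, 100 * recall, 100 * f1, 100 * accuracy)


def f1_from(p: float, r: float) -> float:
    """Harmonic mean of precision and recall given in %."""
    return 2 * p * r / (p + r)


print(round(f1_from(80.5, 77.4), 1))
```

Because F1 ignores true negatives, it can move very differently from accuracy. This is most visible on VUA-ALLPOS, where Acc stays above 90% for every model while F1 varies much more.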

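Table 5 Experimental Results with Different Numbers of State Transitions (%)

| $K$ | VUA-VERB P | VUA-VERB R | VUA-VERB F1 | VUA-VERB Acc | VUA-ALLPOS P | VUA-ALLPOS R | VUA-ALLPOS F1 | VUA-ALLPOS Acc | TroFi P | TroFi R | TroFi F1 | TroFi Acc | MOH-X P | MOH-X R | MOH-X F1 | MOH-X Acc |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 67.3 | 72.6 | 69.8 | 82.5 | 71.8 | 73.3 | 72.5 | 91.7 | 72.6 | 70.4 | 71.5 | 74.6 | 77.8 | 79.8 | 78.8 | 78.1 |
| 2 | 74.6 | 75.7 | 75.1 | 84.2 | 73.3 | 75.7 | 74.5 | 92.4 | 74.1 | 75.6 | 74.8 | 75.3 | 81.3 | 80.5 | 80.9 | 80.8 |
| 3 | 80.5 | 77.4 | 78.9 | 86.7 | 74.6 | 76.5 | 75.5 | 92.8 | 75.6 | 77.8 | 76.7 | 76.2 | 82.1 | 81.6 | 81.8 | 81.6 |
| 4 | 80.9 | 77.1 | 79.0 | 86.2 | 75.1 | 76.0 | 75.5 | 92.8 | 75.8 | 77.5 | 76.6 | 76.3 | 82.1 | 81.8 | 81.9 | 81.2 |
| 5 | 80.8 | 77.2 | 78.9 | 86.6 | 74.8 | 76.1 | 75.4 | 93.1 | 76.1 | 77.8 | 76.9 | 75.3 | 81.4 | 82.1 | 81.7 | 81.6 |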
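Table 5 varies the number of state transitions $K$ in the graph layer, and Table 3 fixes $K=3$. The sketch below shows what repeating a state transition $K$ times means in a graph recurrent encoder. At each step, every word node reads its neighbours and the sentence-level global state, and the global state is then refreshed from all word nodes. This is a deliberately ungated simplification, not KEG's equations. Graph recurrent networks such as those in [35] and [36] use gated recurrent cells, and the sketch also ignores edge labels.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def mean(vectors: List[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def state_transition(h0: List[Vector], edges: List[Tuple[int, int]], k: int) -> Tuple[List[Vector], Vector]:
    """Run k synchronous update steps and return (node states h, global state g).

    Ungated simplification (not KEG's equations):
        h_i <- tanh(h_i + mean(neighbour states) + g)
        g   <- tanh(mean(h))
    Edge labels are ignored and edges are treated as undirected.
    """
    neighbours: Dict[int, List[int]] = {i: [] for i in range(len(h0))}
    for a, b in edges:
        neighbours[a].append(b)
        neighbours[b].append(a)
    h = [list(v) for v in h0]
    g = mean(h)  # global state starts as the mean of the initial word states
    for _ in range(k):
        zero = [0.0] * len(g)
        new_h = []
        for i, hi in enumerate(h):
            m = mean([h[j] for j in neighbours[i]]) if neighbours[i] else zero
            new_h.append([math.tanh(x + y + z) for x, y, z in zip(hi, m, g)])
        h = new_h  # every node read the previous step's states (synchronous update)
        g = [math.tanh(x) for x in mean(h)]
    return h, g
```

Each additional step lets information from neighbouring words and from the sentence state be mixed in once more. Table 5 shows that the gains from further steps level off after $K=3$.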

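Table 6 Experimental Results of Different Methods for Constructing Word Vectors and Context Vectors (%)

| Model | VUA-VERB P | VUA-VERB R | VUA-VERB F1 | VUA-VERB Acc | VUA-ALLPOS P | VUA-ALLPOS R | VUA-ALLPOS F1 | VUA-ALLPOS Acc | TroFi P | TroFi R | TroFi F1 | TroFi Acc | MOH-X P | MOH-X R | MOH-X F1 | MOH-X Acc |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| KEG-word2vec | 74.2 | 73.2 | 73.7 | 82.3 | 73.5 | 74.7 | 74.1 | 90.6 | 74.1 | 73.5 | 73.8 | 74.4 | 78.5 | 76.6 | 77.5 | 78.6 |
| KEG-GloVe | 74.6 | 73.2 | 73.9 | 82.6 | 73.6 | 74.7 | 74.1 | 90.8 | 74.0 | 73.8 | 73.9 | 74.2 | 78.4 | 76.8 | 77.6 | 79.1 |
| KEG-ELMo | 76.4 | 75.5 | 75.9 | 84.8 | 74.2 | 76.1 | 75.1 | 91.7 | 74.6 | 75.8 | 75.2 | 75.6 | 80.4 | 80.3 | 80.3 | 80.7 |
| KEG | 80.5 | 77.4 | 78.9 | 86.7 | 74.6 | 76.5 | 75.5 | 92.8 | 75.6 | 77.8 | 76.7 | 76.2 | 82.1 | 81.6 | 81.8 | 81.6 |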

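Table 7 Experimental Results of KEG with and without Word-Level Sense Knowledge (%)

| Model | VUA-VERB P | VUA-VERB R | VUA-VERB F1 | VUA-VERB Acc | VUA-ALLPOS P | VUA-ALLPOS R | VUA-ALLPOS F1 | VUA-ALLPOS Acc | TroFi P | TroFi R | TroFi F1 | TroFi Acc | MOH-X P | MOH-X R | MOH-X F1 | MOH-X Acc |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| KEG-NOS | 68.2 | 72.9 | 70.5 | 82.7 | 72.6 | 73.1 | 72.8 | 92.1 | 73.2 | 71.5 | 72.3 | 75.4 | 79.4 | 78.2 | 78.8 | 78.8 |
| KEG | 80.5 | 77.4 | 78.9 | 86.7 | 74.6 | 76.5 | 75.5 | 92.8 | 75.6 | 77.8 | 76.7 | 76.2 | 82.1 | 81.6 | 81.8 | 81.6 |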

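Table 8 Experimental Results of KEG with and without Fine-Grained Syntactic Knowledge (%)

| Model | VUA-VERB P | VUA-VERB R | VUA-VERB F1 | VUA-VERB Acc | VUA-ALLPOS P | VUA-ALLPOS R | VUA-ALLPOS F1 | VUA-ALLPOS Acc | TroFi P | TroFi R | TroFi F1 | TroFi Acc | MOH-X P | MOH-X R | MOH-X F1 | MOH-X Acc |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| KEG-UCG | 67.5 | 71.1 | 69.3 | 80.6 | 70.3 | 71.3 | 70.8 | 89.4 | 72.5 | 70.1 | 71.3 | 74.0 | 77.5 | 76.9 | 77.2 | 77.1 |
| KEG-CSK | 71.2 | 70.7 | 70.9 | 81.3 | 72.0 | 71.4 | 71.7 | 90.2 | 73.1 | 70.7 | 71.9 | 74.7 | 78.2 | 78.3 | 78.2 | 78.3 |
| KEG | 80.5 | 77.4 | 78.9 | 86.7 | 74.6 | 76.5 | 75.5 | 92.8 | 75.6 | 77.8 | 76.7 | 76.2 | 82.1 | 81.6 | 81.8 | 81.6 |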
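Table 8 isolates the contribution of fine-grained syntactic knowledge to the information graph. The sketch below shows one common way to turn dependency arcs into a typed graph, following the practice of syntactic GCNs such as Marcheggiani and Titov [33]. Each arc (head, dependent, label) becomes a forward edge typed by its label and a reverse edge typed `label_rev`, and every word also gets a self-loop. This is an assumption about how such a graph could be built, not a description of KEG's exact construction. The example parse is hand-written for illustration rather than produced by a parser.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Arc = Tuple[int, int, str]  # (head index, dependent index, dependency label)


def build_typed_graph(n_words: int, arcs: List[Arc]) -> Dict[str, List[Tuple[int, int]]]:
    """Group directed edges by type: forward label, reversed label, and self-loops."""
    graph: Dict[str, List[Tuple[int, int]]] = defaultdict(list)
    for head, dep, label in arcs:
        if not (0 <= head < n_words and 0 <= dep < n_words):
            raise ValueError(f"arc {(head, dep, label)} is out of range")
        graph[label].append((head, dep))           # head -> dependent
        graph[label + "_rev"].append((dep, head))  # dependent -> head
    graph["self"] = [(i, i) for i in range(n_words)]
    return dict(graph)


# "How to kill a process ?" with an illustrative, hand-written parse.
example = build_typed_graph(6, [(2, 4, "obj"), (4, 3, "det"), (2, 1, "mark"), (2, 0, "advmod")])
print(sorted(example))
```

Keeping one edge type per dependency label lets the graph layer treat, for example, a verb's object differently from its modifiers. Collapsing all labels into a single type would give a coarser, unlabeled graph.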

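Table 9 Experimental Results of Two Decoding Methods (%)

| Model | VUA-VERB P | VUA-VERB R | VUA-VERB F1 | VUA-VERB Acc | VUA-ALLPOS P | VUA-ALLPOS R | VUA-ALLPOS F1 | VUA-ALLPOS Acc | TroFi P | TroFi R | TroFi F1 | TroFi Acc | MOH-X P | MOH-X R | MOH-X F1 | MOH-X Acc |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| KEG-C | 79.5 | 77.3 | 78.4 | 86.5 | 74.2 | 75.6 | 74.9 | 92.2 | 75.8 | 76.3 | 76.0 | 75.9 | 82.0 | 81.2 | 81.6 | 81.5 |
| KEG | 80.5 | 77.4 | 78.9 | 86.7 | 74.6 | 76.5 | 75.5 | 92.8 | 75.6 | 77.8 | 76.7 | 76.2 | 82.1 | 81.6 | 81.8 | 81.6 |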
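Table 9 compares two ways of decoding. KEG decodes with a conditional random field, which at inference time selects the highest-scoring tag sequence as a whole rather than the best tag for each token independently. The sketch below is a minimal Viterbi decoder for a linear-chain CRF over hypothetical per-token emission scores and tag-transition scores. Tag 0 = literal and tag 1 = metaphor is an assumed encoding. Start and end transitions and the training objective are omitted.

```python
from typing import List, Sequence


def viterbi(emissions: Sequence[Sequence[float]], transitions: Sequence[Sequence[float]]) -> List[int]:
    """Highest-scoring tag sequence for a linear-chain CRF.

    emissions[t][y]: score of tag y at position t.
    transitions[a][b]: score of moving from tag a to tag b.
    """
    n_tags = len(transitions)
    score = list(emissions[0])
    backpointers: List[List[int]] = []
    for t in range(1, len(emissions)):
        new_score, pointers = [], []
        for y in range(n_tags):
            # Best previous tag for ending in tag y at position t.
            best_prev = max(range(n_tags), key=lambda a: score[a] + transitions[a][y])
            pointers.append(best_prev)
            new_score.append(score[best_prev] + transitions[best_prev][y] + emissions[t][y])
        score = new_score
        backpointers.append(pointers)
    best = max(range(n_tags), key=lambda y: score[y])
    path = [best]
    for pointers in reversed(backpointers):  # follow back-pointers from the last token
        best = pointers[best]
        path.append(best)
    return path[::-1]


em = [[2.0, 0.0], [0.5, 0.4], [0.0, 2.0]]
print(viterbi(em, [[0.0, 0.0], [0.0, 0.0]]), viterbi(em, [[0.0, 0.0], [0.0, 1.0]]))
```

With all transition scores at zero, the result reduces to the per-token argmax `[0, 0, 1]`. A positive metaphor-to-metaphor transition score flips the ambiguous middle token, giving `[0, 1, 1]`. This sequence-level interaction is what a per-token classifier cannot capture.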

[1] Shutova E. Models of metaphor in NLP[C] //Proc of the 48th Annual Meeting of the Association for Computational Linguistics. Stroudsburg, PA: ACL, 2010: 688−697

[2] Jang H, Moon S, Jo Y, et al. Metaphor detection in discourse[C] //Proc of the 16th Annual Meeting of the Special Interest Group on Discourse and Dialogue. Stroudsburg, PA: ACL, 2015: 384−392

[3] Klebanov B, Leong C, Gutiérrez E, et al. Semantic classifications for detection of verb metaphors[C] //Proc of the 54th Annual Meeting of the Association for Computational Linguistics. Stroudsburg, PA: ACL, 2016: 101−106

[4] Bulat L, Clark S, Shutova E. Modelling metaphor with attribute-based semantics[C] //Proc of the 15th Conf of the European Chapter of the Association for Computational Linguistics. Stroudsburg, PA: ACL, 2017: 523−528

[5] Shutova E, Kiela D, Maillard J. Black holes and white rabbits: Metaphor identification with visual features[C] //Proc of the 2016 Conf of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Stroudsburg, PA: ACL, 2016: 160−170

[6] Mao Rui, Lin Chenghua, Guerin F. End-to-end sequential metaphor identification inspired by linguistic theories[C] //Proc of the 57th Annual Meeting of the Association for Computational Linguistics. Stroudsburg, PA: ACL, 2019: 3888−3898

[7] Gao Ge, Choi E, Choi Y, et al. Neural metaphor detection in context[C] //Proc of the 2018 Conf on Empirical Methods in Natural Language Processing. Stroudsburg, PA: ACL, 2018: 607−613

[8] Rei M, Bulat L, Kiela D, et al. Grasping the finer point: A supervised similarity network for metaphor detection[C] //Proc of the 2017 Conf on Empirical Methods in Natural Language Processing. Stroudsburg, PA: ACL, 2017: 1537−1546

[9] Chen Xianyang, Leong C, Flor M, et al. Go Figure! Multi-task transformer-based architecture for metaphor detection using idioms: ETS team in 2020 metaphor shared task[C] //Proc of the 2nd Workshop on Figurative Language Processing, Fig-Lang@ACL 2020. Stroudsburg, PA: ACL, 2020: 235−243

[10] Su Chuandong, Fukumoto F, Huang Xiaoxi, et al. DeepMet: A reading comprehension paradigm for token-level metaphor detection[C] //Proc of the 2nd Workshop on Figurative Language Processing, Fig-Lang@ACL 2020. Stroudsburg, PA: ACL, 2020: 30−39

[11] Rohanian O, Rei M, Taslimipoor S, et al. Verbal multiword expressions for identification of metaphor[C] //Proc of the 58th Annual Meeting of the Association for Computational Linguistics. Stroudsburg, PA: ACL, 2020: 2890−2895

[12] Leong C, Klebanov B, Shutova E. A report on the 2018 VUA metaphor detection shared task[C] //Proc of the 1st Workshop on Figurative Language Processing, Fig-Lang@NAACL-HLT 2018. Stroudsburg, PA: ACL, 2018: 56−66

[13] Birke J, Sarkar A. A clustering approach for nearly unsupervised recognition of nonliteral language[C] //Proc of the 11th Conf of the European Chapter of the Association for Computational Linguistics. Stroudsburg, PA: ACL, 2006: 329−336

[14] Dunn J. Measuring metaphoricity[C] //Proc of the 52nd Annual Meeting of the Association for Computational Linguistics. Stroudsburg, PA: ACL, 2014: 745−751

[15] Gutiérrez E, Shutova E, Marghetis T, et al. Literal and metaphorical senses in compositional distributional semantic models[C] //Proc of the 54th Annual Meeting of the Association for Computational Linguistics. Stroudsburg, PA: ACL, 2016: 183−193

[16] Mohammad S, Shutova E, Turney P. Metaphor as a medium for emotion: An empirical study[C] //Proc of the 5th Joint Conf on Lexical and Computational Semantics, *SEM@ACL 2016. Stroudsburg, PA: ACL, 2016: 23−33

[17] Tsvetkov Y, Boytsov L, Gershman A, et al. Metaphor detection with cross-lingual model transfer[C] //Proc of the 52nd Annual Meeting of the Association for Computational Linguistics. Stroudsburg, PA: ACL, 2014: 248−258

[18] Wilks Y. A preferential, pattern-seeking, semantics for natural language inference[J]. Artificial Intelligence, 1975, 6(1): 53−74 doi: 10.1016/0004-3702(75)90016-8

[19] Pragglejaz Group. MIP: A method for identifying metaphorically used words in discourse[J]. Metaphor and Symbol, 2007, 22(1): 1−39 doi: 10.1080/10926480709336752

[20] Devlin J, Chang M, Lee K, et al. BERT: Pre-training of deep bidirectional transformers for language understanding[C] //Proc of the 2019 Conf of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Stroudsburg, PA: ACL, 2019: 4171−4186

[21] Vaswani A, Shazeer N, Parmar N, et al. Attention is all you need[C] //Proc of the Advances in Neural Information Processing Systems 30: Annual Conf on Neural Information Processing Systems 2017. San Diego, CA: NIPS, 2017: 5998−6008

[22] Liu Yinhan, Ott M, Goyal N, et al. RoBERTa: A robustly optimized BERT pretraining approach[J]. arXiv preprint, arXiv: 1907.11692, 2019

[23] Leong C, Klebanov B, Hamill C, et al. A report on the 2020 VUA and TOEFL metaphor detection shared task[C] //Proc of the 2nd Workshop on Figurative Language Processing, Fig-Lang@ACL 2020. Stroudsburg, PA: ACL, 2020: 18−29

[24] Kipf T, Welling M. Semi-supervised classification with graph convolutional networks[C/OL] //Proc of the 5th Int Conf on Learning Representations. La Jolla, CA: ICLR, 2017 [2018-05-20]. https://openreview.net/pdf?id=SJU4ayYgl

[25] Steen G, Dorst A, Herrmann J, et al. A method for linguistic metaphor identification: From MIP to MIPVU[M] //Converging Evidence in Language and Communication Research, Volume 14. Amsterdam: John Benjamins Publishing Company, 2010: 25−42

[26] Klebanov B, Leong C, Flor M. A corpus of non-native written English annotated for metaphor[C] //Proc of the 2018 Conf of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Stroudsburg, PA: ACL, 2018: 86−91

[27] Levin L, Mitamura T, MacWhinney B, et al. Resources for the detection of conventionalized metaphors in four languages[C] //Proc of the 9th Int Conf on Language Resources and Evaluation. Paris: LREC, 2014: 498−501

[28] Mohler M, Brunson M, Rink B, et al. Introducing the LCC metaphor datasets[C] //Proc of the 10th Int Conf on Language Resources and Evaluation. Paris: LREC, 2016: 4221−4227

[29] Parde N, Nielsen R. A corpus of metaphor novelty scores for syntactically-related word pairs[C] //Proc of the 11th Int Conf on Language Resources and Evaluation. Paris: LREC, 2018: 1535−1540

[30] Tredici M, Bel N. Assessing the potential of metaphoricity of verbs using corpus data[C] //Proc of the 10th Int Conf on Language Resources and Evaluation. Paris: LREC, 2016: 4573−4577

[31] Chen Xinxiong, Liu Zhiyuan, Sun Maosong. A unified model for word sense representation and disambiguation[C] //Proc of the 2014 Conf on Empirical Methods in Natural Language Processing. Stroudsburg, PA: ACL, 2014: 1025−1035

[32] Mikolov T, Sutskever I, Chen Kai, et al. Distributed representations of words and phrases and their compositionality[C] //Proc of the Advances in Neural Information Processing Systems 26: Annual Conf on Neural Information Processing Systems 2013. San Diego, CA: NIPS, 2013: 3111−3119

[33] Marcheggiani D, Titov I. Encoding sentences with graph convolutional networks for semantic role labeling[C] //Proc of the 2017 Conf on Empirical Methods in Natural Language Processing. Stroudsburg, PA: ACL, 2017: 1506−1515

[34] Liu Xiao, Luo Zhunchen, Huang Heyan. Jointly multiple events extraction via attention-based graph information aggregation[C] //Proc of the 2018 Conf on Empirical Methods in Natural Language Processing. Stroudsburg, PA: ACL, 2018: 1247−1256

[35] Zhang Yue, Liu Qi, Song Linfeng. Sentence-state LSTM for text representation[C] //Proc of the 56th Annual Meeting of the Association for Computational Linguistics. Stroudsburg, PA: ACL, 2018: 317−327

[36] Song Linfeng, Zhang Yue, Wang Zhiguo, et al. A graph-to-sequence model for AMR-to-text generation[C] //Proc of the 56th Annual Meeting of the Association for Computational Linguistics. Stroudsburg, PA: ACL, 2018: 1616−1626

[37] Cho K, Merrienboer B, Gülçehre Ç, et al. Learning phrase representations using RNN encoder-decoder for statistical machine translation[C] //Proc of the 2014 Conf on Empirical Methods in Natural Language Processing. Stroudsburg, PA: ACL, 2014: 1724−1734

[38] Lafferty J, McCallum A, Pereira F. Conditional random fields: Probabilistic models for segmenting and labeling sequence data[C] //Proc of the 18th Int Conf on Machine Learning. San Diego, CA: ICML, 2001: 282−289

[39] Linguistic Data Consortium. BLLIP 1987-89 WSJ Corpus Release 1[DB/OL]. [2020-12-28]. https://catalog.ldc.upenn.edu/LDC2000T43

[40] Loshchilov I, Hutter F. Decoupled weight decay regularization[C/OL] //Proc of the 7th Int Conf on Learning Representations. La Jolla, CA: ICLR, 2019 [2020-01-10]. https://openreview.net/pdf?id=Bkg6RiCqY7

[41] Pennington J, Socher R, Manning C. GloVe: Global vectors for word representation[C] //Proc of the 2014 Conf on Empirical Methods in Natural Language Processing. Stroudsburg, PA: ACL, 2014: 1532−1543

[42] Peters M, Neumann M, Iyyer M, et al. Deep contextualized word representations[C] //Proc of the 2018 Conf of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Stroudsburg, PA: ACL, 2018: 2227−2237
